This post is part of our NeurIPS 2023 Series:

- What is AI Safety?

- Data-Centric AI: Building AI Systems in the Real-World

- Synthetic Data: Diffusion Models

🚀 NeurIPS 2023 is here and Tenyks is on the scene and bringing you the inside scoop.

This article explores the multifaceted realm of AI safety, beginning with an exploration of the risks inherent in AI development. It then presents the nuanced facets of AI safety, clarifying misconceptions and distinguishing it from related concepts. Finally, the article sheds light on the cutting-edge discussions and advancements in AI safety, particularly at the NeurIPS 2023 conference.

Table of Contents

- AI Risks

- What’s AI Safety -and what’s not

- AI Safety at the edge — NeurIPS 2023

- Is e/acc wining over decel in NeurIPS 2023?

- AI-Safety in Computer Vision

- Conclusions

1. AI Risks

Before defining AI Safety, let’s first explore some of the risks inherent to artificial intelligence. These risks, shown in Figure 1, are the main drivers behind the rise of AI Safety.

According to the Center for AI Safety, a research nonprofit organization, there are four main categories [1] of catastrophic risks — a term referring to the potential for AI systems to cause severe and widespread harm or damage.

Malicious Use

- The concern arises from the fact that, with the widespread availability of AI, malicious actors — individuals, groups, or entities with harmful intentions — also gain access to these technologies.

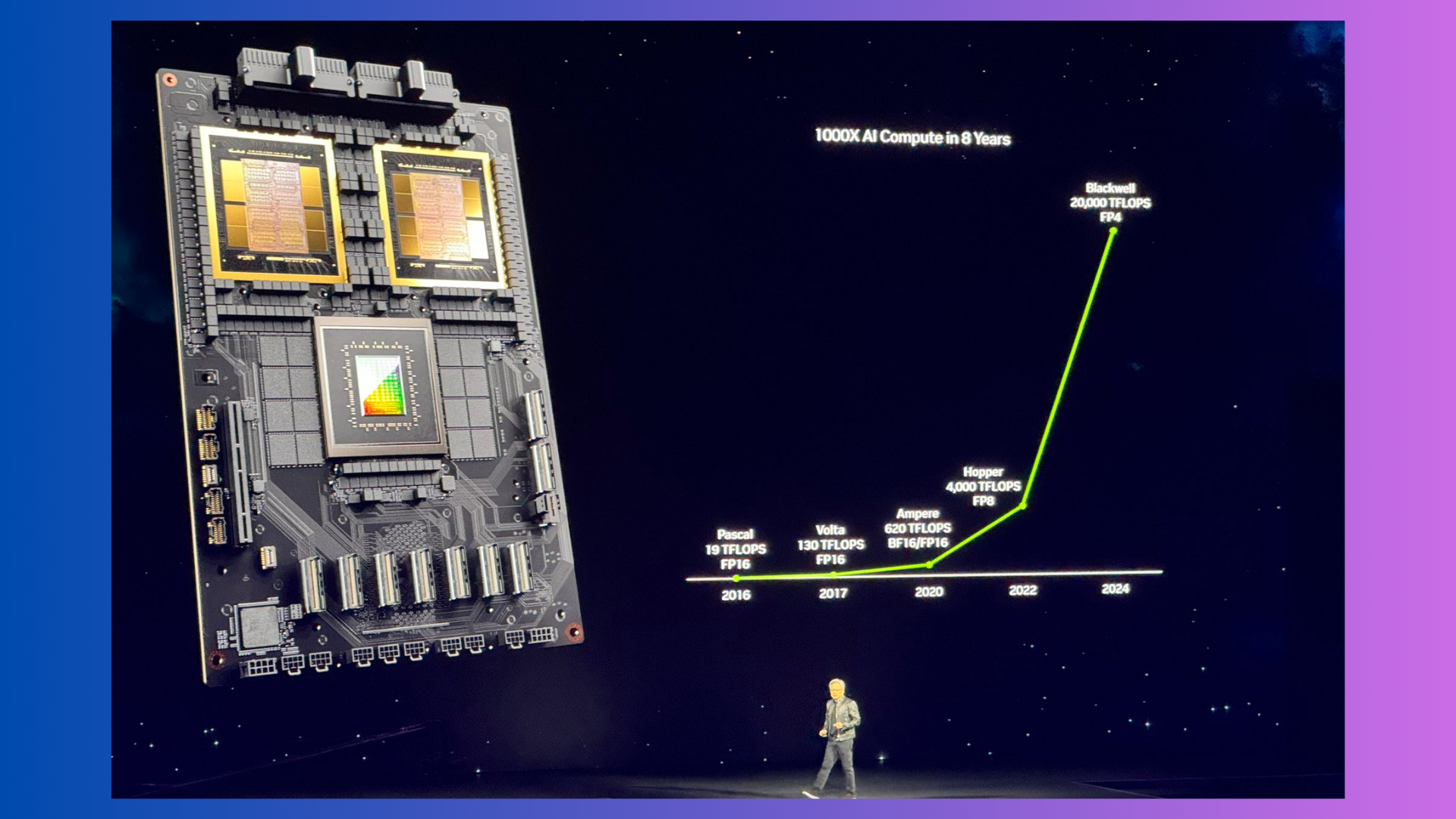

AI Race

- Governments and businesses are racing to advance AI to secure competitive advantages. Analogous to the space race between superpowers, this pursuit may yield short-term benefits for individual entities, but it escalates global risks for humanity.

Organizational Risks

- For AI, a safety mindset is vital. It means everyone in a group making safety a top priority. Ignoring this can lead to disasters, like the Challenger Space Shuttle accident. There, the focus on schedules over safety in the organization caused tragic consequences.

Rogue AI

- AI creators often prioritize speed over safety. This could lead to future AIs acting against our interests, going rogue, and being hard to control or turn off.

So, given the number (and severity) of risks around building/deploying artificial intelligent systems, what are we doing to mitigate such risks? 🤔

2. What is AI Safety? — and what’s not

As machine learning expands into crucial domains, the risk of serious harm from system failures rises. “Safe” machine learning research aims to pinpoint reasons for unintended behaviour and create tools to lower the chances of such occurrences.

Helen Toner [2], former member of the board of directors of OpenAI, defines AI Safety as follows:

AI safety focuses on technical solutions to ensure that AI systems operate safely and reliably.

What’s not AI Safety

AI safety is distinct from some common misconceptions and areas that people may mistakenly associate with it. Figure 2 provides some clarification.

3. AI Safety at the frontier— NeurIPS 2023

So, what’s the state-of-the-art in terms of AI Safety? We share two main works around AI Safety directly from the leading ML researchers presenting at NeurIPS 2023:

3.1 BeaverTails: Towards Improved Safety Alignment of LLM via a Human-Preference Dataset [3]

A dataset for safety alignment in large language models (Figure 3).

- Purpose: Foster research on safety alignment in LLMs.

- Uniqueness: The dataset separates annotations for helpfulness and harmlessness in question-answering pairs, providing distinct perspectives on these crucial attributes.

Applications and potential impact:

- Content Moderation: Demonstrated applications in content moderation using the dataset.

- Reinforcement Learning with Human Feedback (RLHF): Highlighted potential for practical safety measures in LLMs using RLHF.

3.2 Jailbroken: How Does LLM Safety Training Fail? [4]

This work investigates safety vulnerabilities in Large Language Models.

- Purpose: To investigate and understand the failure modes in safety training of large language models, particularly in the context of adversarial “jailbreak” attacks.

- Uniqueness: The work uniquely identifies two failure modes in safety training — competing objectives and mismatched generalization — providing insights into why safety vulnerabilities persist in large language models.

Applications and potential impact:

- Enhanced Safety Measures: Develop more robust safety measures in large language models, addressing vulnerabilities exposed by adversarial “jailbreak” attacks.

- Model Evaluation and Improvement: Evaluation of state-of-the-art models and identification of persistent vulnerabilities.

4. Is e/acc winning over decel in NeurIPS 2023?

As you might be aware of, when it comes to AI rapid progress, there is a philosophical debate between two ideologies e/acc (or simply acc without the “effective altruism” idea) and decel:

- e/acc: “let’s accelerate technology, we can ask questions later”.

- decel: “let’s slow down the pace because AI is advancing too fast and poses a risk for human civilization”

In NeurIPS 2023 e/acc seems to have won over decel

Out of 3,584 accepted papers, less than 10 works are related to AI Safety! 😱 As shown in Figure 5, even keywords such as “responsible AI” bring no better results!

What might be the reason for this? 🤔 Are academic researchers simply less interested in safety compared to private organizations?

5. AI-Safety in Computer Vision

Today’s ML systems are far from perfect. In extreme cases, they can grab and break a finger when they get confused. At Tenyks, focus on making sure your ML team can:

- Debug a model by finding undetected objects, false positives and mispredictions.

- Evaluate particular data slices to find the root causes of low model performance.

- Search for edge cases using multi-modal embedding search.

At Tenyks, we are pioneering tools that help ML teams in Computer Vision identify where a model is failing.

6. Conclusions

AI safety stands as one of the most pressing issues in artificial intelligence.

Recognizing the significance, leading AI research labs are actively engaged in addressing these challenges. For instance, OpenAI divides its safety efforts into three aspects: safety systems, preparedness, and superalignment.

In this article, before defining AI safety, we first highlighted some of the most threatening AI risks. With that in mind, it becomes more evident that current AI systems desperately need guardrails to avoid catastrophic threats.

Based on the number of submitted papers at NeurIPS 2023, it appears that private organizations are leading the charge in terms of AI Safety. However, we introduced two of the main works, focusing on AI Safety, presented this week at NeurIPS 2023, the leading conference in machine learning.

Stay tuned for more NeurIPS 2023-related posts!

References

[1] An overview of catastrophic AI risks

[2] Key concepts in AI Safety: an overview

[3] BeaverTails: Towards Improved Safety Alignment of LLM via a Human-Preference Dataset

[4] Jailbroken: How Does LLM Safety Training Fail?

Authors: Jose Gabriel Islas Montero, Dmitry Kazhdan

If you would like to know more about Tenyks, sign up for a sandbox account.