Your current data selection process may be limiting your models.

Massive datasets come with obvious storage and compute costs. But the two biggest challenges are often hidden: money and time. As data volumes grow, companies struggle to handle the sheer size of their datasets.

For any company, naively sampling small portions of large datasets (e.g. datasets of 1 million images or more) seems prudent, but it overlooks immense value. Useful insights get buried in a haystack of unused data. For instance, how else do you overcome data imbalance if not by adding the data that restores the balance?

In this post, we’ll unpack the two hidden costs of large datasets, and why current ways to leverage these datasets are expensive and inefficient.

Table of Contents

- Introduction

- An exclusive model-centric approach is narrow

- Why does this matter?

- How to identify your hidden costs

- Conclusion

1. Introduction

Most AI companies sit on massive amounts of unlabelled data, using only a fraction for model development. This selective approach seems pragmatic, but leaves unseen value on the table. Without the right data selection tools, critical data gets buried in an avalanche of data (see Figure 1).

The result? Wasted resources, stagnating models, and missed opportunities. Large datasets come with obvious massive costs — expensive storage, high labelling costs, and costly training cycles.

Of these costs, labelling (or annotation) is probably the most underestimated when it comes to very large datasets. On top of that, label errors are often the most common training-data problem ML teams face (see Figure 2).

Yet the two biggest costs are invisible: money and time to transform large unlabelled data into the right labelled data. Companies lack solutions to identify and leverage their most valuable data assets.

2. An exclusive model-centric approach is narrow

Improving model architecture alone neglects other critical factors that impact success: it assumes the model itself is the solution, rather than a component.

This model-only perspective discounts how powerfully the training data can lift results. Flaws in dataset quality, coverage, or labelling may be the true bottlenecks, and no model tweak can compensate for poor data.

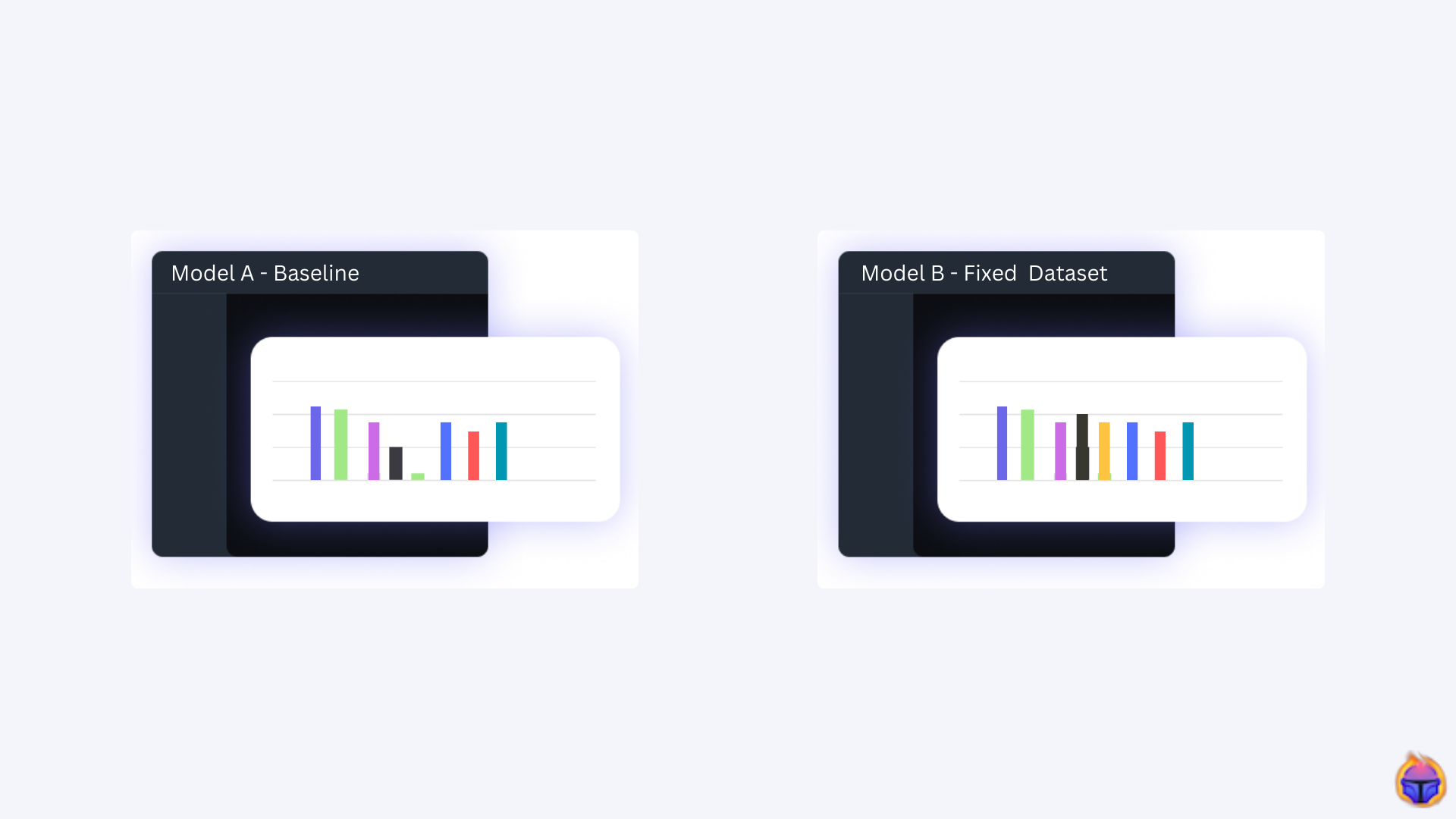

As Table 1 shows, when deciding between focusing on the model or the data, you are better off allocating your budget toward a data-centric approach.

A rigid model focus also rarely considers real-world deployment factors.

- How will performance differ outside pristine lab conditions?

- What if input data changes over time?

Modeling in isolation lacks insights from implementation experience.

The only certain way I have seen make progress on any task is:

You curate a dataset that is clean and varied, and you grow it, and you pay the labeling cost. I know that works.

Andrej Karpathy, CVPR’20 Keynote

Former Director of Artificial Intelligence @ Tesla

As Andrej Karpathy points out, simply accumulating raw data is not enough — the dataset must be intentionally curated for quality and diversity. This means inspecting data closely, cleaning irregularities, and enriching variety where needed.

Yes, preparing large, high-quality training sets takes substantial labelling time and cost. But this investment is non-negotiable: it directly enables the model capabilities you want to develop.

3. Why does this matter?

There are several reasons why using a low-quality fraction of a large dataset might limit your ML system:

Model Behaviour

- Selection Bias. Using a non-representative subset biases the model: it sees only part of the full picture. This can skew model behaviour on new data that differs from the sampled subset.

- Underfitting. Insufficient data volume can prevent the model from fully learning key patterns. With only partial data, the model never achieves optimal fit.

- Overcorrection. If a validation or test set is unrepresentative, it may suggest tweaks that are not actually needed. The model is then overcorrected to match a skewed sample. This happens very often in practice!
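
A quick way to check a subset for the selection bias described above is to compare its class shares with those of the full pool. The sketch below is only illustrative: it assumes that class labels (or proxy tags such as metadata) are available for both sets, and the function names and toy data are hypothetical.

```python
from collections import Counter


def class_shares(labels):
    """Return the fraction of samples that belongs to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: n / total for cls, n in counts.items()}


def max_share_gap(pool_labels, subset_labels):
    """Largest absolute difference in class share between pool and subset."""
    pool = class_shares(pool_labels)
    subset = class_shares(subset_labels)
    return max(abs(pool[c] - subset.get(c, 0.0)) for c in pool)


# Hypothetical pool: 90% "car", 10% "cyclist".
pool = ["car"] * 90 + ["cyclist"] * 10
# Hypothetical subset that happens to contain no cyclists at all.
subset = ["car"] * 20

gap = max_share_gap(pool, subset)
missing = sorted(set(pool) - set(subset))
print(f"largest class-share gap: {gap:.2f}, classes missing from subset: {missing}")
```

A large gap, or any class missing entirely, suggests the subset does not represent the pool it was drawn from.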

Silent Model Failures

- Edge cases. A subset may not sufficiently represent the full diversity of objects/scenarios. As a result, the model is more likely to fail on uncommon or edge cases it rarely/never saw during training.

- Poor generalization. Performance degrades slowly on new data distributions as the model faces more unfamiliar examples. The decline is gradual rather than sudden.

- Logical but incorrect predictions. The model makes high-confidence but wrong predictions, for example by mistaking a visually similar object for the target.
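
One possible way to surface the high-confidence errors described above is to audit predictions against a small labelled set. This is a minimal sketch under assumptions: the record fields (`predicted`, `actual`, `confidence`), the 0.9 threshold and the toy records are all hypothetical.

```python
def confident_errors(records, threshold=0.9):
    """Return records predicted with confidence >= threshold that are wrong."""
    return [
        r for r in records
        if r["confidence"] >= threshold and r["predicted"] != r["actual"]
    ]


# Hypothetical audit set with ground-truth labels.
audit = [
    {"id": 1, "predicted": "dog", "actual": "dog", "confidence": 0.97},
    {"id": 2, "predicted": "dog", "actual": "wolf", "confidence": 0.95},
    {"id": 3, "predicted": "cat", "actual": "dog", "confidence": 0.55},
]

flagged = confident_errors(audit)
print([r["id"] for r in flagged])
```

Only the second record is flagged here: the third is also wrong, but its low confidence already signals uncertainty, so it is not a silent failure.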

4. How to identify your hidden costs

The root cause of the problem: too much data! 🙀

When models fail, our instinct is often to throw more data at the problem. But with massive datasets, size becomes the enemy. Extensive volumes hide vital data and create needle-in-a-haystack challenges.

Throwing all data at the model not only yields diminishing returns (marginal improvements at great effort and cost), it also triggers many of the problems outlined in the previous section (e.g. silent model failures).

The root problem is not inadequate data quantity, but ineffective curation and sampling. More data just obscures the highest-value data you already possess.

ML teams need solutions to reveal and selectively utilize their most informative samples: the key 🔑 is transitioning from haphazard data collection to precise targeting of high-impact subsets.

Labelling costs 💰 in numbers

Labelling all data (i.e. the inefficient way to figure out which data is valuable) seems like a brute-force solution, but it proves immensely expensive and slow. This naive approach makes it difficult to identify the most valuable samples buried within massive datasets.

Assume we have a dataset of 100,000 images with 50 bounding boxes each. The total cost of labelling this dataset is $200,000, or 1,041 working days (see Figures 4, 5 and 6 for more details).

Now, what if rather than 100,000 images, you have 1,000,000 images? Assuming costs increase linearly, we end up with labelling costs of roughly $2M or 10,000 working days! 🙀
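
The arithmetic behind these figures can be sketched as follows. The per-box rates are assumptions, not values taken from the figures: $0.04 per bounding box, 6 seconds per box and an 8-hour working day were chosen because they reproduce the totals quoted above.

```python
def labelling_cost(num_images, boxes_per_image=50,
                   usd_per_box=0.04,      # assumed price per bounding box
                   seconds_per_box=6.0,   # assumed annotation time per box
                   hours_per_day=8.0):    # assumed length of a working day
    """Return (cost in USD, working days) to label every box in the dataset."""
    boxes = num_images * boxes_per_image
    cost_usd = boxes * usd_per_box
    working_days = boxes * seconds_per_box / 3600 / hours_per_day
    return cost_usd, working_days


cost_100k, days_100k = labelling_cost(100_000)
cost_1m, days_1m = labelling_cost(1_000_000)

print(f"100,000 images:   ${cost_100k:,.0f}, {days_100k:,.1f} working days")
print(f"1,000,000 images: ${cost_1m:,.0f}, {days_1m:,.1f} working days")
```

Under these assumed rates, both cost and time scale linearly with the number of images, which is why a tenfold larger dataset lands at roughly $2M and a little over 10,000 working days.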

On top of this, we might need to add the computing costs of training on a set of 1,000,000 images. For simplicity, we only focus on the labelling costs in this article.

Identifying critical data in days — rather than years

Now, how do you solve this problem? One solution is to select data intelligently. Active Learning [1,2] is one such approach.

Active Learning with techniques such as Coreset Selection [3] might enable your team to cut labelled data needs from millions of samples to targeted subsets of under 20,000. Likewise, required labelling time can be slashed from 10,000 days to only 100 days.

“The goal of active learning is to find effective ways to choose data points to label, from a pool of unlabelled data points, in order to maximize the accuracy.” [2]
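
To give a feel for how coreset-style selection chooses data points, here is a minimal sketch of greedy k-center (farthest-first) selection over feature embeddings. It is written in the spirit of [3] but is not claimed to be the exact procedure from that paper; the function name, the toy 2-D embeddings and the choice of Euclidean distance are assumptions.

```python
import math


def k_center_greedy(points, k, first=0):
    """Pick k indices so that every point lies close to some picked point.

    Farthest-first traversal: repeatedly add the point that is farthest
    from the set selected so far.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    selected = [first]
    # Distance from each point to its nearest selected center so far.
    min_dist = [math.dist(p, points[first]) for p in points]
    while len(selected) < k:
        # The point farthest from the current selection becomes a center.
        nxt = max(range(len(points)), key=min_dist.__getitem__)
        selected.append(nxt)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, points[nxt]))
    return selected


# Hypothetical 2-D embeddings: two tight clusters and one isolated point.
embeddings = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
              (5.0, 5.0), (5.1, 5.0), (10.0, 0.0)]
picked = k_center_greedy(embeddings, k=3)
print(picked)
```

On this toy data the selection takes one point from each cluster plus the isolated point, rather than three near-duplicates from the first cluster. That is the intuition behind labelling a diverse subset instead of everything.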

With a team of just 10 labelers, companies can annotate critical samples in 2 weeks rather than the dozens of months demanded by exhaustive labelling.
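
The team arithmetic can be checked with a short calculation. It assumes that labelling parallelizes perfectly across labelers and that a working week has 5 days; both are simplifications.

```python
def calendar_weeks(total_working_days, labelers, days_per_week=5):
    """Weeks needed when the work is split evenly across labelers."""
    days_per_labeler = total_working_days / labelers
    return days_per_labeler / days_per_week


weeks_targeted = calendar_weeks(100, labelers=10)
weeks_exhaustive = calendar_weeks(10_000, labelers=10)

print(f"targeted subset: {weeks_targeted:.0f} weeks")
print(f"exhaustive labelling: {weeks_exhaustive:.0f} weeks")
```

For the same team of 10, the targeted subset takes 2 weeks, while exhaustive labelling takes 200 weeks, i.e. close to four years.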

😀 Stay tuned for future posts on Active Learning!

5. Conclusion

When working with massive datasets, more is not always better. Indiscriminate data aggregation obscures the relevant samples and incurs tremendous hidden costs in money and time.

ML teams that target the right subsets of data, rather than an arbitrary fraction of it, can unlock the full potential of the unlabelled data they already possess.

Without zooming out to evaluate the entire machine learning pipeline, model-centric thinking can steer teams in unproductive directions: it tries to reinvent the model when simpler, data-centric approaches may be more impactful.

Companies that embrace precise, efficient data practices will leap ahead, saving time and money and realizing returns beyond what massive datasets could ever deliver alone.

References

[1] A Survey of Deep Active Learning

[2] Active Learning: Problem Settings and Recent Developments

[3] Active Learning for Convolutional Neural Networks: A Core-Set Approach

Authors: Jose Gabriel Islas Montero, Botty Dimanov
