One model to rule them all. This is the myth many aspiring machine learning engineers ascribe to. Just build one exceptionally performing neural network, deploy it to production, and watch the magic happen, right?

In reality, developing reliable machine learning systems takes not just one model, but many models working together at different stages of the process.

In this post, we’ll explore how multiple models are needed throughout the machine learning lifecycle — from initial prototyping and data collection to deployment and monitoring.

Table of Contents:

- The Myth of the One True Model

- Models across the Machine Learning Lifecycle

- Conclusion: It Takes a Village of Models

1. The Myth of the One True Model

In the world of Machine Learning there is a common belief that if you just design the perfect neural network architecture, and train it on all available data, you will get exceptional performance that transfers seamlessly to real-world usage. But unfortunately, this is largely a myth.

🔗 Even if you had 1 Million images to train your model, there are hidden costs in large datasets. Learn more about these costs in our post here!

Relying on a single model, no matter how elaborate, leaves you vulnerable to a number of risks:

- Overfitting on biases in the training data that don’t generalize well.

- Failing to account for new data that differs from the training distribution.

- Lacking mechanisms to tune performance over time as use cases evolve.

- Lack of redundancy if the model crashes or needs to be updated.

Where does this Myth originate?

The myth of a single optimal machine learning model originates from academic research and human cognitive biases, but gets dispelled upon deployment to complex, shifting real-world environments, see Figure 1.

While intellectually appealing, a sole model proves fragile and limited in practice.

3. Models across the Machine Learning Lifecycle

TL;DR: There’s no One True Model, instead a reliable production grade ML System requires a number of models across the machine learning lifecycle.

3.1 Data Collection: Prototyping for Insights

- When first collecting an object detection dataset, you typically start with a small set of initial images to bootstrap the process.

- You would then train a very basic prototype model on this small dataset — for example a F-RCNN or YOLO architecture with just a few thousand images across the target classes.

3. The goal is not to build an accurate model yet, but rather to validate that the initial data can train a model at all, and to uncover any potential data issues or biases.

Finding errors in your dataset

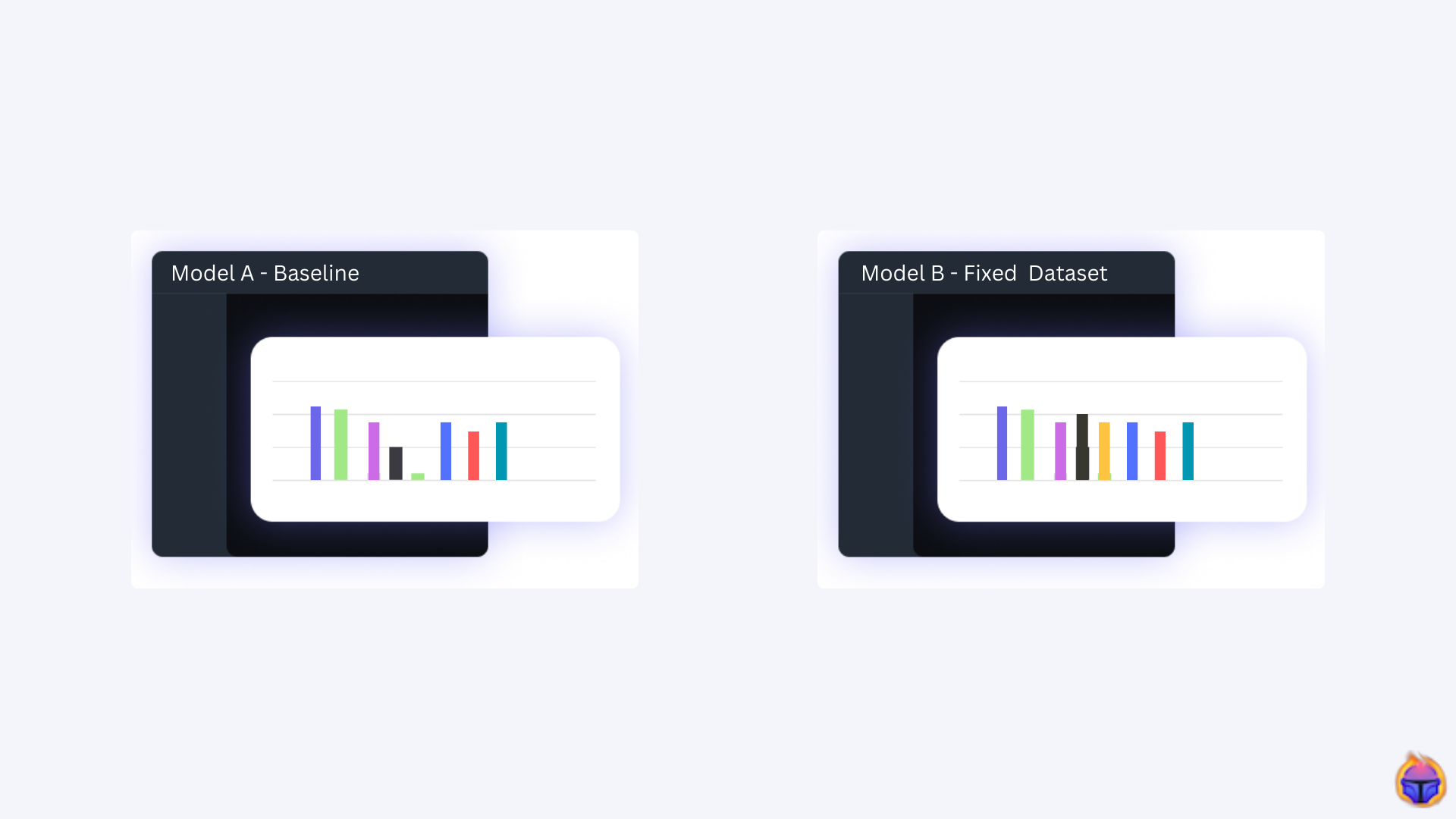

During this stage it’s crucial to collect high quality data. What if your model performance is quite low in this early stage? A solid first step is to explore your data to spot dataset failures (e.g. mislabelled samples).

You often have three options:

- i) build your own tooling

- ii) go open-source

- iii) use a platform with built-in features that can help you increase model performance by identifying dataset issues in record time. Disclaimer: Tenyks was built for this! (Figure 2)

3.2 Data Pre-processing: Finding the Optimal Representation

Once you have collected a dataset, the next step is determining how to pre-process and augment the data before feeding it into the model architecture.

1. Apply pre-processing and augmentation techniques.

2. Train between 2 and 3 models keeping the architecture constant, while varying the pre-processing and augmentation strategies:

- i) train one model with just normalization,

- ii) one model with normalization and flipping, and

- iii) one model with normalization, flipping, cropping, and color shifts.

3. Evaluate these 2–3 models on a validation set. The aim is to determine which combination of pre-processing and augmentation works best for your architecture and dataset, as shown in Figure 3 (Note: the values on this table are for illustration purposes only).

⚠️ Be aware that contrary to intuition, not all augmentations are beneficial. For instance, in this collaborative post with Roboflow, where we analyzed a traffic signs dataset, we discovered that horizontal flipping actually confused the model.

3.3 Modeling: Iterating for Performance

- Train at least 5 models with different architectures. In this stage, you want to track mAP during training to pick the optimal checkpoint for each model architecture.

- 2. After training the models, rigorously measure mAP for each model on a holdout validation set.

- 3. Also important is to evaluate inference speed and model size/complexity: there is a trade-off between accuracy and efficiency! (Figure 4).

3.4 Evaluation: Ensembling for Robustness

1. After training and evaluating multiple object detection models, the next step is to pick the top 2–3 best performing ones.

2. These are the models that achieved the highest validation mAP during the initial round of training and evaluation. Note: mAP is only a surface metric, meaning that it cannot answer questions such as why my model is performing well on Class Y, but it keeps failing on 60% of the samples in Class X — Tenyks was also built for this! 😃

3. Now, the next step may be to create an ensemble [5]: combine these top models together into one unified model. Why? The goal is for the strengths of the individual models to complement each other and cover each other’s weaknesses.

4. Common ensembling methods [1, 6] include:

- Averaging the bounding box predictions from each model

- Using a weighted average based on each model’s individual mAP

- Having a second model rescore the detections from the first models

- Chaining models so the output of one feeds into the next

5. This combined ensemble model is then evaluated on a holdout test set to get a final benchmark of its real-world performance.

3.5 Deployment: Optimizing for Production

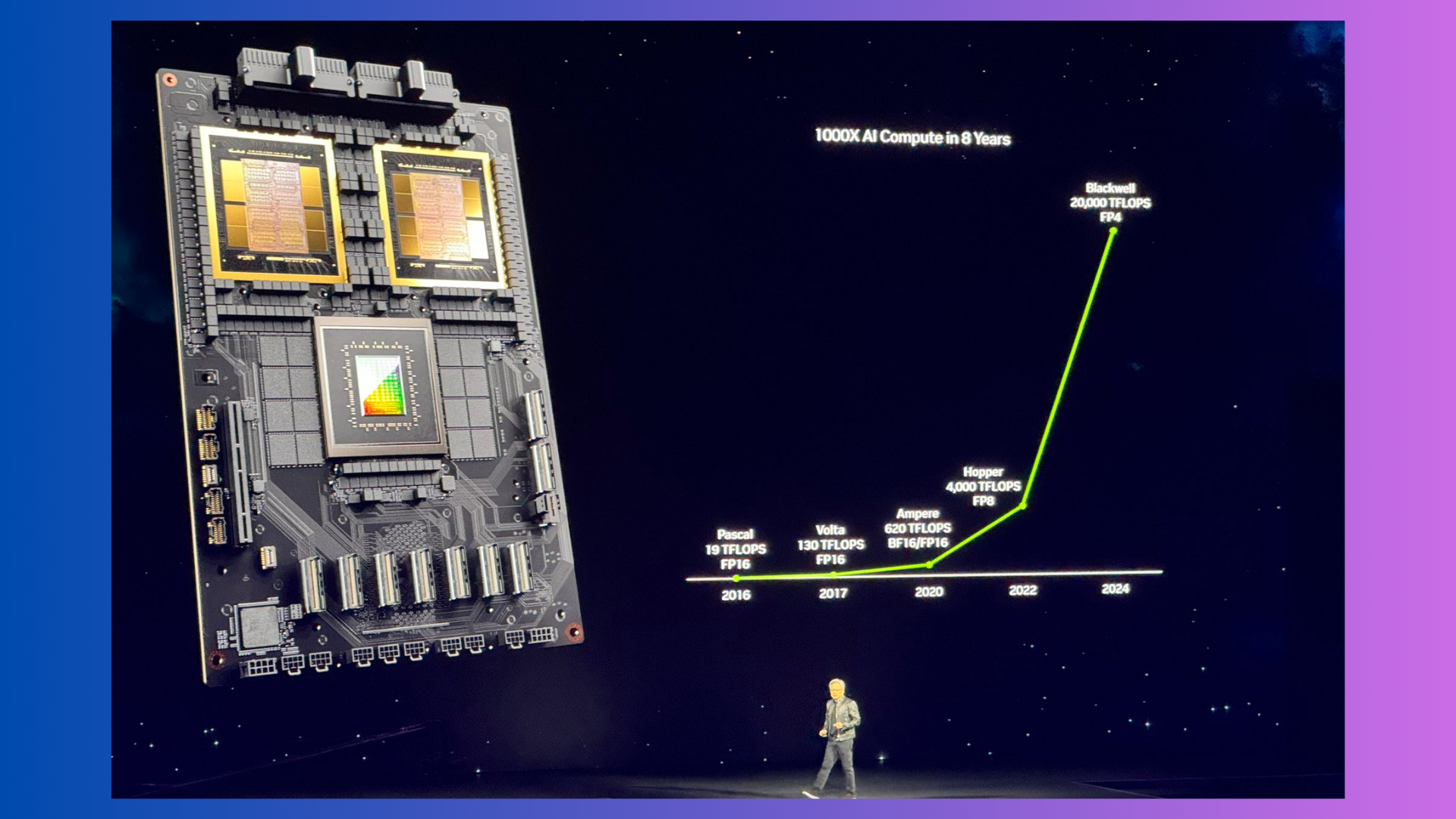

The next step is optimizing the model for deployment to production. In Figure 5 we show NVIDIA, and one of its servers for deployment, but in reality many other providers are available (e.g., Azure, AWS).

An open-source software that helps standardize model deployment and delivers fast and scalable AI in production. NVIDIA Triton

There are a few common techniques to optimize for speed and size:

- Pruning: Remove redundant weights and connections from the models. This approach can reduce size with minimal accuracy drop [2].

- Quantization: Use lower precision numbers like int8 vs float32. This technique makes models faster and smaller [3].

- Knowledge distillation: Train a smaller “student model” to mimic the ensemble “teacher model” [4]

For edge devices, quantization, pruning and compiling models into an optimized runtime like TensorFlow Lite can be useful. For cloud deployment, using optimized libraries like TensorRT or compiling via ONNX can improve latency and throughput. For mobile, CoreML can optimize and compile models for iOS.

Once optimized, re-test the ensemble on the holdout set to ensure no major accuracy regression. The optimized model can then be integrated into an application and deployed to production 🚀.

3.6 Monitoring: Maintaining Accuracy Over Time

Once the optimized ensemble model is deployed to production, ongoing monitoring is needed.

Logs from the application should record statistics like:

- Inference latency — is it meeting response time needs?

- Inference failures — are any images causing crashes or errors?

- Live precision/recall — how accurate are detections on real images?

By analyzing these logs, you can catch deployment issues early. Data from the logs can also be used to continuously add new training data.

Comparing production to training data

A crucial challenge in this stage is evaluating how well a machine learning model performs when faced with real-world, operational data as opposed to the data it was originally trained on.

This comparison is important for assessing the generalization and effectiveness of the model in practical scenarios. You don’t need to build this functionality from scratch; Tenyks can help your team compare production data to training data overtime, allowing you to automatically identify any potential distribution shifts in your system.

⚙️ Plan to continuously collect new training data

As new images or video frames are captured, they can be labelled and added to the training set. Periodically (e.g. monthly or quarterly), you retrain the model on this new accumulated data. This keeps the model up-to-date with changes in the application environment and use cases over time. The updated model can be tested for improved metrics and then re-deployed to production.

4. Conclusion: It Takes a Village of Models

So in summary:

- 1–2 models during prototyping

- 2–3 models for data preparation and augmentation steps

- 5+ models in the modeling stage

- Ensemble top models after evaluation

- Retrain and update models as new data comes in

The modeling stage demands rigorous iteration with multiple models to enable ensemble approaches that are more robust than single models. Yet assembling an ensemble is not a one-time endeavor — practical machine learning requires continuously expanding and integrating specialized models throughout the lifecycle.

We must not overlook the critical role of data quality at each stage

Clean, representative data is the lifeblood of reliable machine learning. Garbage in leads to garbage out, no matter the model sophistication. From curating diverse training sets to instrumentation for production monitoring, dedicating resources to solid data underlies success.

By obsessing over data quality along each step, we empower better models. In machine learning, perfect data does not exist — but the pursuit of perfection drives positive progress.

References

[1] Ensemble methods for object detection

[2] Methods for pruning deep neural networks

[3] Quantization and Training of Neural Networks for Efficient

Integer-Arithmetic-Only Inference

[4] Distilling the Knowledge in a Neural Network

[5] Object detection through Ensemble of models

[6] Building Flexible Ensemble ML Models with a Computational Graph

Authors: Jose Gabriel Islas Montero, Dmitry Kazhdan, Botty Dimanov

If you would like to know more about Tenyks, sign up for a sandbox account.